TinyML for decentralized and energy-efficient AI

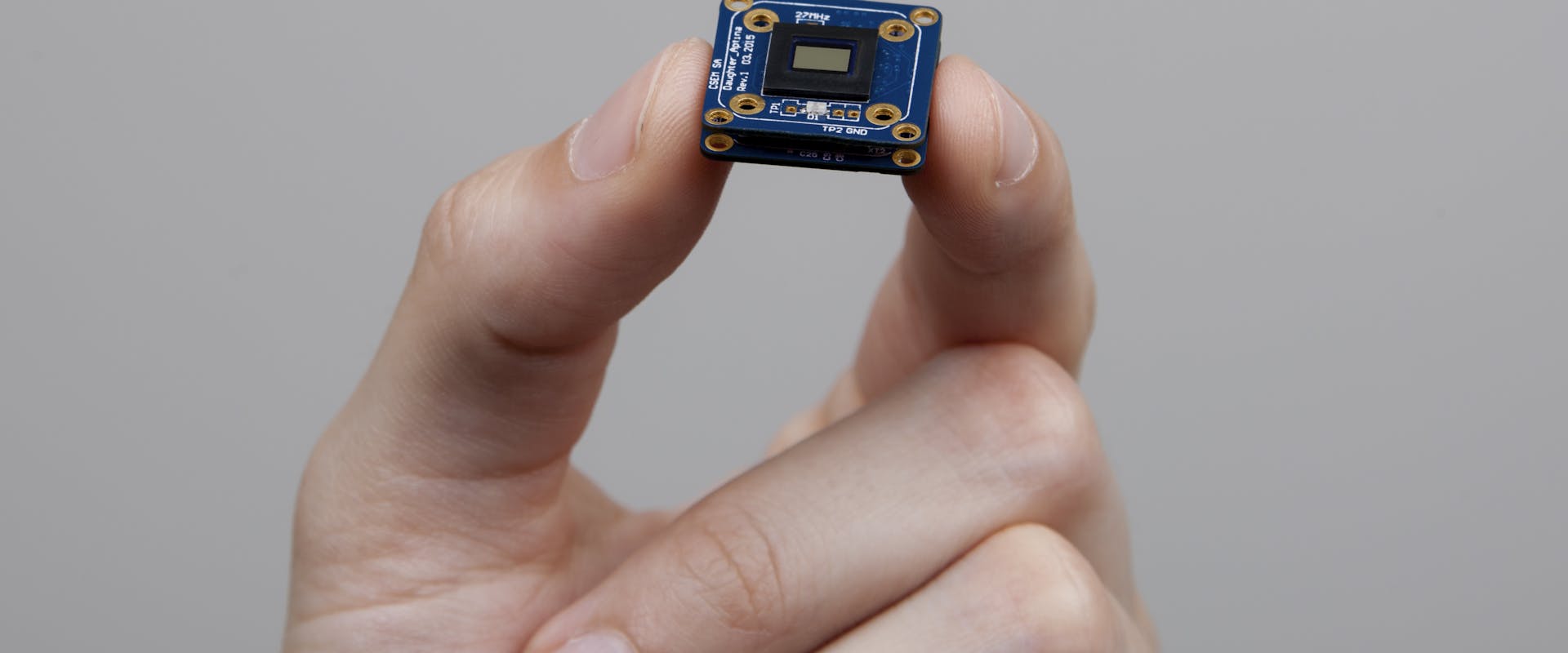

Artificial Intelligence (AI) is now part of our daily lives, embedded in connected wristbands and even smartphones. How is it possible to integrate such powerful algorithms into environments where every milliwatt counts? In this article, first published in French by ICT Journal on March 20, Andrea Dunbar, Group Leader for Edge AI and Vision at CSEM, and PhD candidate Simon Narduzzi present the advantages of TinyML for reducing the Cloud workload, along with tomorrow's challenges.

Mobile devices and the Internet of Things (IoT) enable new forms of interaction with the digital world. Through machine learning, the technique behind AI, objects become ‘smart’ and can process large amounts of information. However, most connected objects still send unprocessed data to the Cloud, where it is analyzed by complex algorithms before the result is sent back to the user. This approach requires extensive infrastructure (networks, data centers). It also raises questions about the reliability, speed, security, and confidentiality of data, not to mention the energy consumed by data communication and the Cloud infrastructure. TinyML (Tiny = small, ML = machine learning) is a domain of embedded computing that focuses on deploying machine learning directly in connected objects, providing a secure and resource-efficient alternative to cloud computing.

How TinyML could reduce the Cloud workload

Cloud-based processing of data from connected devices requires extensive infrastructure to transmit, convert, and store the data. Storing and processing data in the end devices themselves (edge AI) can therefore reduce infrastructure costs. In addition, intelligent end devices are often used in applications where decision speed plays a central role: in autonomous driving, for example, cloud-based data processing presents an unacceptable risk for safety-critical decisions. Serious consequences could follow if the connection is interrupted or if latency is too high. Furthermore, a connected system is a potential target for cyber-attacks.

Critical applications, such as monitoring a high-throughput production line, also generate large volumes of data. In such settings, latency is a security risk and endangers production. End devices that can make decisions independently are therefore essential: they avoid costly downtime and prevent data from being viewed by third parties.

Finally, the energy required for data transmission and processing in the Cloud remains high: for streaming alone, 40% of the required energy is consumed by the mobile network. With over 30 billion connected objects expected by 2025, and against the backdrop of the climate and energy crisis, machine learning "on the edge" can significantly reduce the energy consumption associated with cloud data processing.

What makes TinyML unique is its ability to run complex algorithms on devices with limited computing capacity and a low power budget. Such devices often operate in the range of tens of milliwatts, in stark contrast to industrial neural networks running on graphics cards that draw about 300 W (a ratio of about 1:10,000). In recent years, TinyML has grown rapidly, especially in the integration of AI capabilities on microcontrollers and sensors. These devices run very simple machine learning models that are optimized for low computing power.
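The ratio quoted above can be checked with a quick calculation. This is only a sketch: the 300 W figure comes from the text, while the 30 mW device is an assumed value picked from the "tens of milliwatts" range.

```python
# Power figures expressed in milliwatts to keep the arithmetic exact.
GPU_POWER_MW = 300_000   # about 300 W, as quoted in the text
DEVICE_POWER_MW = 30     # assumption: one point in the "tens of milliwatts" range


def power_ratio(device_mw, gpu_mw):
    """Return how many times more power the graphics card draws than the device."""
    return gpu_mw / device_mw


ratio = power_ratio(DEVICE_POWER_MW, GPU_POWER_MW)
print(f"device : GPU = 1:{ratio:,.0f}")
```

With a different assumed device power, the ratio shifts accordingly: a 10 mW device would give 1:30,000.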

Solutions for embedded machine learning

Recent R&D efforts in collaboration with industry have enabled the rapid implementation of new, energy-efficient deep learning algorithms and the development of new computational paradigms and learning techniques. These approaches are closely linked to hardware, as circuit board technologies must also be optimized for such algorithms to be usable. Some solutions are already available and widely used to reduce the size of models, especially to embed deep learning models for face recognition on smartphones or blood pressure tracking on connected watches and wristbands.

Among the various machine learning algorithms, neural networks are a very popular type of model that has been applied to a wide range of problems. Their success is mainly due to their ease of creation and their ability to offer acceptable performance even without prior specialized knowledge. In addition, pre-trained networks are easily accessible and available to the public on the Internet. Inspired by the biological nervous system, neural networks consist of layers of neurons connected to each other by virtual synapses, called the weights or parameters of the neural network. These parameters determine how signals are transmitted from one layer to the next. They are represented as matrices and are optimized as the neural network learns to solve a problem.
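To make the role of the weight matrices concrete, here is a minimal sketch of a signal passing through two layers. The layer sizes, the weight values, and the ReLU activation are all illustrative assumptions, not details from the article.

```python
def dense(inputs, weights):
    """One layer: each row of `weights` holds the synapses feeding one neuron.

    The neuron sums its weighted inputs and applies ReLU (an assumed activation).
    """
    return [max(0.0, sum(w * x for w, x in zip(row, inputs))) for row in weights]


def forward(inputs, layers):
    """Pass the signal through each weight matrix in turn."""
    signal = inputs
    for weights in layers:
        signal = dense(signal, weights)
    return signal


# Hypothetical network: 2 inputs -> 3 hidden neurons -> 1 output neuron.
layers = [
    [[0.5, -1.0], [1.0, 1.0], [-0.5, 0.25]],
    [[1.0, 0.5, -1.0]],
]

output = forward([1.0, 2.0], layers)
print(output)
```

Training consists of adjusting the numbers inside `layers`; the structure of the computation stays the same.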

In embedded computer systems, storage space is limited, and algorithms must be based on compact networks to fit on the device. Factorization is commonly used to decompose weight matrices and store them as vectors, which drastically reduces the number of parameters. Another popular technique, quantization, involves changing the precision of the weights: from 32-bit floating-point numbers to 8-bit integers or even lower-precision representations. Additionally, redundant parameters can be identified and removed from the model, a technique known as pruning. Under certain circumstances, an entire layer of neurons may be removed without compromising performance. A more elaborate technique, known as knowledge distillation, involves training a smaller neural network (the student) to imitate the predictions of a more complex neural network (the teacher), and then using the resulting model on the chip. The "student" does not need to internalize all the "teacher's" knowledge; it only needs to fulfill its specific task. These methods can be combined to achieve better results. This area is making rapid progress, with many new techniques and combinations being developed.
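Three of these techniques can be sketched in a few lines. The specific choices below are assumptions made for illustration, since the article does not prescribe them: a symmetric scheme with one scale per tensor for quantization, a simple magnitude threshold for pruning, and a low-rank product of two thin matrices for factorization.

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from their integer form."""
    return [q * scale for q in quantized]


def prune_by_magnitude(weights, threshold):
    """Set to zero every weight whose magnitude is below the threshold."""
    return [w if abs(w) >= threshold else 0.0 for w in weights]


def factorized_param_count(rows, cols, rank):
    """Parameters needed when a rows x cols matrix is stored as
    (rows x rank) times (rank x cols)."""
    return rank * (rows + cols)


weights = [0.8, -0.05, 0.31, -1.27, 0.002]   # hypothetical layer weights

quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
pruned = prune_by_magnitude(weights, threshold=0.1)

print(quantized)
print(pruned)
print(256 * 256, "->", factorized_param_count(256, 256, rank=8))
```

Each restored weight differs from the original by at most half a quantization step, which is the accuracy cost paid for storing 8 bits instead of 32.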

Development approaches and challenges

Despite these solutions, significant challenges remain, including improving the models’ accuracy and robustness, battery life, and data privacy. Applying the techniques described above degrades the performance of the models, so current research focuses on mitigating this impact by improving the algorithms. Furthermore, it is now widely established that most of the energy budget of embedded systems is spent on data transfer between memory and processor. Energy consumption can therefore be reduced, and battery life improved, if the hardware architecture reduces this data transfer. Intelligent memory, for example, can perform simple operations on data before forwarding it to the processor (in-memory computing).

The current trend is towards so-called non-von Neumann architectures. In this paradigm, memory and computation are mixed: new models such as spiking neural networks, which more closely resemble biological neural networks, no longer store information in external memory but in the neurons and synapses of the network. Old concepts such as analog computing are also being revived by operating transistors in the subthreshold regime, which leads to enormous energy savings at the cost of less accurate results. Finally, researchers are exploring how new learning paradigms can meet data protection requirements, how they will change the way we interact with the environment and the world around us, and how we can ensure that they are used ethically and responsibly. Approaches include training algorithms on the distributed chips themselves, where objects either learn autonomously (online learning) or exchange minimal information with each other to help each other (federated learning).
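As an illustration of the "minimal information" exchanged in federated learning, the sketch below combines the weights learned on several devices into one shared model, while the raw data never leaves the devices. It assumes federated averaging, a common aggregation scheme that the article does not name, and all values are hypothetical.

```python
def federated_average(client_weights, client_sizes):
    """Average per-device weight vectors, weighting each device by the
    number of local samples it trained on."""
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]


# Hypothetical example: two devices, each holding a two-parameter model.
device_weights = [[1.0, 2.0], [3.0, 4.0]]
device_sizes = [1, 3]   # local training samples seen by each device

global_weights = federated_average(device_weights, device_sizes)
print(global_weights)
```

The device that saw three times as much data pulls the shared model three times as strongly towards its own weights.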

In the future, objects could become autonomous in both energy and decision-making, with data remaining at the periphery and shared with the Cloud only when a deeper analysis is required. Connected objects will be able to learn independently and inform us in real time about the state of our environment. Intelligence will be present everywhere, distributed, and within reach. To get there, the TinyML community must continue to improve the quality of the models while ensuring that they run smoothly on devices with particularly low power consumption. With TinyML, we can benefit from a technology that is more respectful of our planet while continuing to innovate and improve our daily lives.

* * *

A growing Swiss community

Intelligent TinyML objects exist at the interface of hardware and software, and developing and qualifying these devices requires new tools. The TinyML Foundation, an international organization, was created to accelerate progress in this field and facilitate the development of new applications. The foundation has just opened a branch in Switzerland, with members from industry and academia such as CSEM, ETH Zurich, Logitech, SynSense, and Synthara. In 2023, publicly accessible events will take place, including technical presentations and workshops.

For more information, visit: www.tinyml.org